Post 004 - Performance Metrics

From Post 003,

During the fast.ai lesson, Jeremy Howard explained how to read a confusion matrix. Depending on the metric used to train the model, the confusion matrix can show which categories the model got wrong and what it predicted instead. Fast.ai has a built-in function to generate a simple confusion matrix.

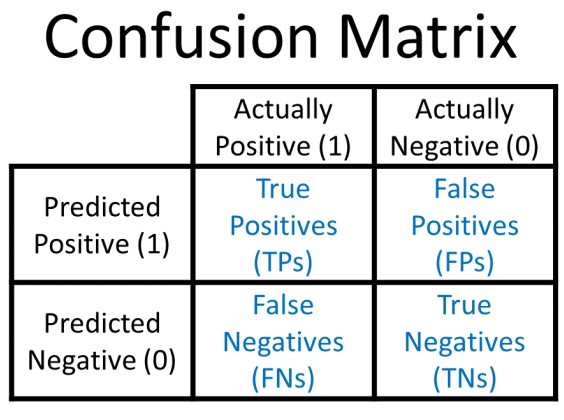

The cells of the confusion matrix can also be described as true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN).
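To make the four counts concrete, here is a minimal sketch of how they could be tallied by hand for a two-class problem. It does not use fast.ai; the helper `count_outcomes`, the `ConfusionCounts` tuple, and the sample labels are all made up for illustration, and it assumes labels are encoded as 1 (positive) and 0 (negative).

```python
from typing import NamedTuple, Sequence


class ConfusionCounts(NamedTuple):
    """Hypothetical container for the four cells of a binary confusion matrix."""
    tp: int  # predicted positive, actually positive
    tn: int  # predicted negative, actually negative
    fp: int  # predicted positive, actually negative
    fn: int  # predicted negative, actually positive


def count_outcomes(actual: Sequence[int], predicted: Sequence[int],
                   positive: int = 1) -> ConfusionCounts:
    """Tally TP, TN, FP and FN by comparing each prediction with its true label."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")

    tp = tn = fp = fn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1
        elif p == positive:   # predicted positive, but the true label is negative
            fp += 1
        elif a == positive:   # predicted negative, but the true label is positive
            fn += 1
        else:
            tn += 1
    return ConfusionCounts(tp, tn, fp, fn)


# Made-up labels, purely for illustration (not results from my model).
actual = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0]

counts = count_outcomes(actual, predicted)
print(counts)  # ConfusionCounts(tp=3, tn=3, fp=1, fn=1)
```

For a model with more than two categories, the same idea applies one class at a time: treat that class as "positive" and everything else as "negative".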

If I convert the confusion matrix to the following format:

I could use those details to visualise the performance of the model. Here are some mathematical formulas I learned to translate these details into the performance metrics:

Calculate the accuracy metric of the model:

Accuracy = (TP + TN) / (TP + TN + FP + FN)

Calculate the precision metric of the model:

Precision = TP / (TP + FP)

Calculate the recall metric of the model:

Recall = TP / (TP + FN)
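The three formulas above translate directly into code. The following is a small sketch rather than anything from fast.ai: the function names and the example counts are hypothetical, and returning 0.0 when a denominator is zero is my own assumption (other conventions exist).

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Accuracy = (TP + TN) / (TP + TN + FP + FN)."""
    total = tp + tn + fp + fn
    # Assumption: report 0.0 instead of dividing by zero when there are no samples.
    return (tp + tn) / total if total else 0.0


def precision(tp: int, fp: int) -> float:
    """Precision = TP / (TP + FP): of everything predicted positive, how much was right."""
    predicted_positive = tp + fp
    return tp / predicted_positive if predicted_positive else 0.0


def recall(tp: int, fn: int) -> float:
    """Recall = TP / (TP + FN): of everything actually positive, how much was found."""
    actual_positive = tp + fn
    return tp / actual_positive if actual_positive else 0.0


# Made-up counts for illustration only (not taken from my model's confusion matrix).
tp, tn, fp, fn = 40, 45, 5, 10

print(f"Accuracy:  {accuracy(tp, tn, fp, fn):.3f}")  # 0.850
print(f"Precision: {precision(tp, fp):.3f}")         # 0.889
print(f"Recall:    {recall(tp, fn):.3f}")            # 0.800
```

With these counts, precision (0.889) is higher than recall (0.800) because there are fewer false positives (5) than false negatives (10).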